Happy New Year and Next Blog Posts

On New Year's Eve I was wondering what the actual origin story of this celebration was.

I’ve always found it a bit weird to celebrate the simple passing of time, much like our birthdays, actually.

So I checked online, and it seems that we mostly celebrate the arrival of the new year for its "fresh start effect", which lets us close the chapter of one year and dive into a new one.

It has apparently been celebrated for thousands of years and it originates from ancient Babylon!

I must say, it actually resonates with me!

So... happy new year and I wish you, dear reader, a very fruitful 2026!

In this blog post I will take the time to reflect on 2025 and mention the blog post ideas that I have in mind for the future.

Last Year

From a technology perspective, last year was an interesting one. I got to use many technologies in my side projects that I hadn’t used before, and I will go through them one by one.

Kind/K3D/K3S

While I have used Kubernetes at work for a few years now, I hadn’t taken the time to dive into k3s, which is a lightweight, single-binary distribution of Kubernetes.

That is no longer the case, as this website now runs on k3s!

As an aside, I used to run it on Docker Swarm, but I realized that since I already use Kubernetes at work, the switch from Docker Swarm to k3s wouldn't be that hard, and I'd end up with something more future-proof.

I must say k3s is an amazing piece of technology. Having a lightweight Kubernetes distribution that can run on a 4 GB VPS is a great user experience (I later benefited from a promotional offer and now have 8 GB of RAM on my VPS, the true lap of luxury).

While I was previously using KinD for local development, I recently discovered k3d. It's a really helpful alternative, much closer to k3s (who would have guessed) and faster to spin up than KinD. Also, using Kustomize makes it seamless to switch from local development to production.

Garage

2025 was not a good year for open source. We witnessed the slow-motion rot of MinIO, which steadily degraded its open source version throughout the year, with the final blow coming a few weeks ago when the MinIO repository went into maintenance mode.

While I can understand that the people behind that project must put food on the table and are probably pressured by VCs in these high-interest, uncertain times, the way this was handled was... really telling. I think many people learned a valuable lesson here about what it means to willingly help a VC-backed company that requires contributors to sign shady CLAs.

Anyway, I decided to switch to Garage for storing the blobs of data for my website, and it turned out to be a single-afternoon adventure.

I also follow RustFS closely, but it requires the same kind of CLA as MinIO. In my - now "paranoid" - opinion, this is a sign of a potential future rug pull.

Garage is great: it's AGPLv3, the people behind it seem to have a non-profit mindset and vision for open source, and there are no CLAs that contributors must sign. That looks like true FOSS to me.

Meilisearch & Sequin

I discovered Meilisearch and Sequin in December, and I integrated both tools into my website in a single day. I wanted to give readers the ability to perform full-text searches on the articles I wrote. I could have used Postgres, but its full-text search functionality is not known for offering the best user experience (typo tolerance, search as you type, etc.).

My first architectural iteration involved having my backend synchronize data between Postgres (where articles are stored) and Meilisearch. However, this required implementing synchronization logic to prevent Meilisearch from serving stale information. I quickly realized I needed a single source of truth, and that role belongs to Postgres. Meilisearch was meant to be a satellite service.

The best pattern for this kind of architectural constraint is Change Data Capture (CDC), which is why I chose to use Sequin. It provides a direct connection between Postgres and Meilisearch without requiring a broker in between.

Meilisearch is amazing and runs fine on my small 8 GB VPS with a pod requesting only 1 GB. Sequin, on the other hand, is a bit more resource-hungry and requires 2 GB. That's a total of 3 GB out of my 8 GB budget, quite a lot for a "nice to have" feature, but the comfort of my readers is of paramount importance!

In practice, both services consume very little when idle and work extremely well together. My backend logic could remain unchanged: it only interacts with Postgres, while CDC handles synchronization with Meilisearch.
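To make the CDC idea above a bit more concrete, here is a minimal Python sketch of the pattern. It is not how Sequin or Meilisearch work internally: the `ChangeEvent` shape, the `SearchIndex` class and the `replay` helper are all hypothetical names I made up for illustration, and the "index" is just an in-memory dictionary. The point is only that the search side is rebuilt purely from a stream of row-level changes, while the backend never writes to it directly.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class ChangeEvent:
    """One row-level change, in a hypothetical shape a CDC tool might emit."""
    action: str                       # "insert", "update" or "delete"
    record_id: int                    # primary key of the affected row
    record: Optional[dict[str, Any]]  # new row contents, None for deletes


class SearchIndex:
    """In-memory stand-in for the search engine's document store."""

    def __init__(self) -> None:
        self.documents: dict[int, dict[str, Any]] = {}

    def apply(self, event: ChangeEvent) -> None:
        if event.action in ("insert", "update"):
            # Upsert: the index always mirrors the latest row contents.
            self.documents[event.record_id] = dict(event.record or {})
        elif event.action == "delete":
            # Deleting a row the index never saw is a no-op.
            self.documents.pop(event.record_id, None)
        else:
            raise ValueError(f"unknown action: {event.action}")


def replay(index: SearchIndex, events: list[ChangeEvent]) -> None:
    """Apply changes in commit order so the index converges on the database."""
    for event in events:
        index.apply(event)


# Example: the database is the single source of truth, the index only follows.
index = SearchIndex()
replay(index, [
    ChangeEvent("insert", 1, {"title": "Happy New Year"}),
    ChangeEvent("update", 1, {"title": "Happy New Year and Next Blog Posts"}),
])
```

Because every change flows in one direction, there is no synchronization logic to maintain in the backend: if the index is ever lost, replaying the changes (or a fresh snapshot) brings it back.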

Svelte & SvelteKit

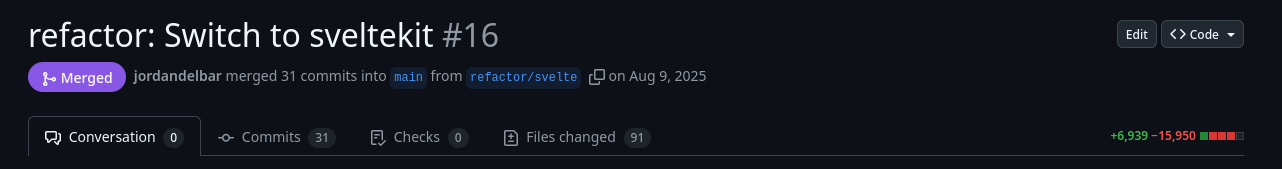

The first version of this website was built with React. I then switched to Vue, and later to Nuxt. I’m not a frontend engineer, and I was honestly skeptical about all the magic happening in these frameworks, especially the higher-level ones like Nuxt. There are probably good reasons for using these that completely elude me.

Anyway, mid-year I discovered Svelte and decided to port my website from Nuxt to SvelteKit, as the syntax felt much more “logical” to me. Svelte is far more understandable than the other frameworks I’ve worked with, and the reduction in lines of code for the same result is actually staggering.

Tailscale

I mentioned k3s earlier, and honestly, Tailscale deserves its own paragraph. It helped me a lot in securing my VPS. I’m not exposing port 22 publicly, my control plane is behind a firewall, and Tailscale makes all of this seamless, and free. It’s truly amazing software.

That said, it has now become my single point of failure, to be honest.

Next Year

Enough about last year!

In the coming year, I have a few projects in mind. In my first post, I mentioned showing how to deploy a machine learning model with k8s. Well, over the holidays I have been working on a project similar to my yolo-tonic implementation, but this time using an RT-DETRv2 model.

This project uses IPC with mmap, FlatBuffers and semaphores with mqueue instead of gRPC over the network, to squeeze out as much performance as possible and avoid costly serialization and network latency. I intend to make it public in the next couple of months.
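To give a rough feel for the memory-mapped part of that idea, here is a small Python sketch. It is not the project's code: it runs in a single process on an anonymous mapping, uses a plain 4-byte length prefix where the real project uses FlatBuffers, and leaves out the semaphore and mqueue signaling entirely. The `write_frame` and `read_frame` helpers and the framing are assumptions made up for this illustration.

```python
import mmap
import struct

# Assumed framing for this sketch: a 4-byte little-endian payload length.
HEADER = struct.Struct("<I")


def write_frame(buf: mmap.mmap, payload: bytes) -> None:
    """Copy one length-prefixed payload to the start of the mapped region."""
    if HEADER.size + len(payload) > len(buf):
        raise ValueError("payload does not fit in the mapped region")
    HEADER.pack_into(buf, 0, len(payload))
    buf[HEADER.size:HEADER.size + len(payload)] = payload


def read_frame(buf: mmap.mmap) -> bytes:
    """Read back the payload that write_frame stored in the region."""
    (length,) = HEADER.unpack_from(buf, 0)
    return bytes(buf[HEADER.size:HEADER.size + length])


# Anonymous mapping; two real processes would map the same file or
# shared-memory object instead, and signal each other when data is ready.
region = mmap.mmap(-1, 4096)
write_frame(region, b"frame-0001")
message = read_frame(region)
region.close()
```

The appeal of this approach is that the payload is written once into memory both sides can see, rather than being serialized, sent through a socket and parsed again on the other end.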

This project is built to run on k3s, so you'll see how to deploy ONNX Runtime services with k3s, if that is something that interests you!

After that I'm not exactly sure what project I'll work on, to be honest. I might finally take a look at LLM inference services like vLLM, as I sometimes feel a bit out of the loop on that front.

A few months ago I started reading Build a Large Language Model (From Scratch) by Sebastian Raschka. I really enjoyed his Machine Learning with PyTorch and Scikit-Learn book a few years ago, so I definitely recommend reading both if you like ML/AI.

In any case, my goal this year is to keep improving my Rust skills and continue working on these systems-level topics, as that's something I genuinely enjoy. Search and vector databases have also caught my interest, so I might explore those as well! I also plan to write an article about different serialization formats like Protobuf and Avro, and their backward and forward schema evolution.

If you have any areas you'd like me to cover, feel free to ping me on my contact page!

Anyway! Time to wrap this up. Once again, I wish you a very happy new year, and I hope all your resolutions come true! Take care!